© Laurenz Albe 2025

© Laurenz Albe 2025

Table of Contents

Everybody wants good performance. When it comes to the execution of SQL statements, accurate optimizer statistics are key. With the upcoming v18 release, PostgreSQL will preserve the optimizer statistics during an upgrade with dump/restore or pg_upgrade (see commit 1fd1bd8710 and following). With the beta testing season for PostgreSQL v18 opened, it is time to get acquainted with the new feature.

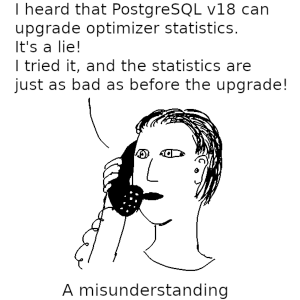

First, let's clear up a frequent source of confusion. We use the word “statistics” for two quite different things in PostgreSQL:

pg_stat directory. You can access these data through the pg_stat_* views.ANALYZE gathers these data and stores them in the catalog tables pg_class, pg_statistic and pg_statistic_ext_data. These data allow the query planner to estimate the cost and result row count of query execution plans and allow PostgreSQL to find the best execution plan.I'll talk about the latter kind of statistics here, and I call them “optimizer statistics” (as opposed to “monitoring statistics”).

Before PostgreSQL v18, there was no way to preserve optimizer statistics across a (major) upgrade. That was no big problem if you upgraded using pg_dumpall — loading the dump will automatically trigger autoanalyze. Waiting for the statistics collection to finish constitutes a additional delay until the database is ready for use. However, dump/restore is slow enough that that additional delay doesn't really matter much. At least you need no manual intervention to gather the statistics!

The situation with pg_upgrade was considerably worse. pg_upgrade dumps and restores only the metadata from the catalog tables and leaves the data files unchanged. pg_upgrade doesn't trigger autoanalyze, so you needed to ANALYZE the new database after the upgrade to get decent query performance. The additional delay used to be particularly annoying, since pg_upgrade is supposed to be a fast upgrade technique.

Under the hood, pg_upgrade uses the “hidden” option pg_dump --binary-upgrade to extract the metadata from the old database cluster. Because of that connection, adding optimizer statistics to pg_dump made it easy for pg_upgrade to adopt the same enhancement.

pg_dump options for dealing with optimizer statisticsBy default, pg_dump does not export optimizer statistics. You have to use the option --with-statistics to make it export the optimizer statistics.

PostgreSQL dumps optimizer statistics for tables, table columns and expression indexes. However, it won't dump the data for extended statistics (only the object definition). So if you are using extended statistics, you should explicitly ANALYZE all tables using them. Otherwise, your SQL statements may not perform as good as they should.

PostgreSQL v18 adds a new option to vacuumdb that is specifically designed to deal with that shortcoming. You should run the following after an upgrade with pg_upgrade:

|

1 |

vacuumdb --all --analyze-only --missing-stats-only |

This command will collect only those optimizer statistics that are still missing. After an upgrade that will be the extended statistics. Using --missing-stats-only, you can be ready to roll in the shortest possible time. Thanks to Bruce Momjian for telling me about that new feature!

Perhaps some future release of PostgreSQL will also preserve extended statistics during an upgrade. But even without that, it will be much faster to ANALYZE a few tables than to recalculate statistics for the whole database.

I should also mention that PostgreSQL v18 does not preserve the monitoring statistics during an upgrade. The most important implication of missing monitoring statistics is that autovacuum and autoanalyze lose their memory: after an upgrade, they don't know which tables need VACUUM or ANALYZE. So it can be a smart idea to trigger a manual VACUUM (ANALYZE) on your busy, large tables at some point after an upgrade to avoid bloat and bad optimizer statistics. Perhaps a future release of PostgreSQL will be able to preserve these data as well during an upgrade.

PostgreSQL v18 dumps the statistics for a table as follows:

|

1 2 3 4 5 6 7 8 9 |

SELECT * FROM pg_catalog.pg_restore_relation_stats( 'version', '180000'::integer, 'schemaname', 'myschema', 'relname', 'tab', 'relpages', '443'::integer, 'reltuples', '100000'::real, 'relallvisible', '443'::integer, 'relallfrozen', '443'::integer ); |

The exported statistics for a table or index column look like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

SELECT * FROM pg_catalog.pg_restore_attribute_stats( 'version', '180000'::integer, 'schemaname', 'myschema', 'relname', 'tab', 'attname', 'col', 'inherited', 'f'::boolean, 'null_frac', '0'::real, 'avg_width', '4'::integer, 'n_distinct', '-0.74276'::real, 'most_common_vals', '{352,616,709,137,153,176}', 'most_common_freqs', '{0.0003,0.0003,0.0003,0.00023333334,0.00023333334,0.00023333334}', 'histogram_bounds', '{1,139,248,359,474,578,685,794,897,1021,2173,3217,4259,5413,6582}', 'correlation', '0.8223619'::real ); |

The system functions pg_restore_relation_stats() and pg_restore_attribute_stats() write the data into the catalog tables pg_class and pg_statistic.

With any feature there are some people who find creative ways to use it in ways it was not intended. I bet there are people who are happy that they finally have a way to force query execution plans: simply take the optimizer statistics from a system where the execution plan looks like you want and import the statistics from that system.

I think that is an ugly approach. First, you are lying to the optimizer, which might come to bite you when running other statements. Second, it is tricky to keep autovacuum from ANALYZEing the table and overwriting your carefully crafted statistics. There is no way to disable autoanalyze other than disabling autovacuum as a whole, and that is a rather bad idea. The best you can probably do is to set autovacuum_analyze_scale_factor to 100.0. Then you can trigger a daily job that analyzes the table and imports the statistics in the same transaction.

An idea how to make that trick work more easily and reliably if autovacuum_analyze_scale_factor were allowed to be set to -1 to disable autoanalyze (similar to how that works for autovacuum_vacuum_insert_threshold). I am not sure how I feel about that though: on the one hand, it is an ugly hack. On the other hand, it might sometimes be the only solution to force a certain query execution plan.

The ability to upgrade optimizer statistics along with the rest of the database, new in PostgreSQL v18, can greatly reduce the down time during an upgrade with pg_upgrade. You might also use it to “forge” optimizer statistics in order to force certain execution plans.

In PG 18, "vacuumdb --missing-stats-only" is an easy way to update only the missing statistics, which for now means expression indexes.

Oh great, thanks, I wasn't aware of that.

I'll add it to the article.