Table of Contents

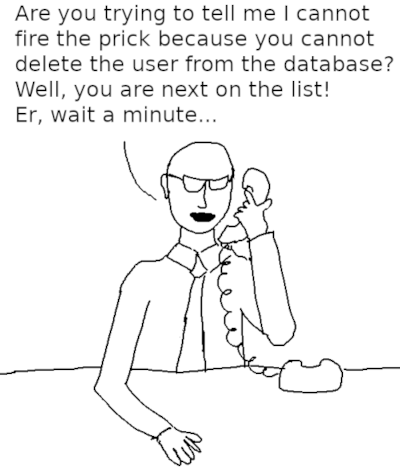

You might, at first glance, believe that DROP ROLE (or DROP USER, which is the same) is a simple matter. However, that's not always the case. So I thought it might be a good idea to show you the problems involved and how to solve them.

DROP ROLE ... CASCADE?PostgreSQL tracks dependencies between objects in the system catalogs pg_depend and pg_shdepend. Sometimes, dropping an object automatically drops other associated objects: for example, dropping a table will drop all indexes and triggers associated with the table. In other cases, you get an error if you drop an object with dependencies: for example, you can't drop a schema that still contains other objects.

The usual way to automatically drop all dependent objects is to use the CASCADE option. For example, “DROP SCHEMA schema_name CASCADE” will automatically try to drop all objects in the schema. So it comes as a surprise that there is no CASCADE option for DROP ROLE. Now what is the reason? Consider these facts:

As a consequence, PostgreSQL cannot guarantee that a single statement will get rid of all objects that depend on a role. So we can't have a CASCADE option for DROP ROLE that can drop all dependent objects.

DROP ROLE?The best thing you can do is to never drop a role that owns objects or has permissions. That's easy to accomplish, if you use “group roles” to carry permissions and ownership, and if you make sure that roles that might be dropped are members of these group roles. That way, the roles inherit all privileges, and you can still drop them at any time. For example, you could have a role “readonly” that has read permissions on everything, and if you temporarily need a role with read access, you just make that role a member of readonly.

Another advantage of using group roles is that you don't have to grant the same permissions to several roles. Not only is that cumbersome and error-prone, but it also inflates the “access control lists” on the objects.

DROP OWNED BY and REASSIGN OWNED BYPostgreSQL has a utility command DROP OWNED BY that drops all objects owned by a role in the current database. It also revokes all permissions granted to the role in the current database. So you can get rid of most objects with dependencies to a role by connecting to all databases in turn and running “DROP OWNED BY role_name” in each of them.

Note that this may still fail: some of the objects that DROP OWNED BY wants to drop might have dependencies to objects owned by other roles, which prevent PostgreSQL from dropping them. In that case, you can use “DROP OWNED BY role_name CASCADE” to have the DROP cascade to these dependent objects.

An alternative to dropping the objects is to change their ownership to a different role. You can do that in bulk with REASSIGN OWNED BY. Again, this only affects objects in the current database, so you have to issue REASSIGN OWNED BY in all databases with objects owned by the role you want to get rid of. Note: REASSIGN OWNED BY does not modify or remove permissions granted to the role, so you may want to follow it with DROP OWNED BY to revoke those permissions.

DROP OWNED BYThere are only two kinds of objects that might be left behind by DROP OWNED BY, both of which are not part of any database:

Getting rid of the databases is easy: you connect to database postgres and drop them or change their ownership.

Tablespaces are more difficult: you cannot drop a tablespace unless there are no more objects in that tablespace. This is a similar case to roles, since a tablespace can contain objects from different databases. Consequently, there is also no CASCADE option for DROP TABLESPACE. In practice, you will probably end up changing the ownership of the tablespace to a different role.

postgres”!There is one special role in each PostgreSQL cluster: the bootstrap superuser created during initdb. Usually that role is called “postgres”, but it always has the object ID 10. If you attempt to drop that role or use DROP OWNED BY and REASSIGN OWNED BY on it, you end up with these error messages:

|

1 2 3 |

cannot drop role postgres because it is required by the database system cannot drop objects owned by role postgres because they are required by the database system cannot reassign ownership of objects owned by role postgres because they are required by the database system |

You always need the bootstrap superuser, so you can't remove it. If you don't like the name of the user, you can simply rename it with ALTER ROLE ... RENAME TO .... Object names are only tags in PostgreSQL and you can change them at any time.

With DROP OWNED BY and REASSIGN OWNED BY it is not difficult to get rid of a role (user), even if it has many dependencies. Still better is to avoid the problem from the start by not granting permissions to roles that might get deleted. Use group roles for that!

If you are interested in tracking permissions in a PostgreSQL database, read more about it here in Hans' blog: pg_permissions.

In order to receive regular updates on important changes in PostgreSQL, subscribe to our newsletter, or follow us on Twitter, Facebook, or LinkedIn.

Hi Laurenz.

Could mention that DROP OWNED BY does not scale, because of locks: https://www.spinics.net/lists/pgsql/msg209900.html

Also mentioned in https://www.cybertec-postgresql.com/postgresql-you-might-need-to-increase-max_locks_per_transaction/ in a different context.

Sure it scales; all you have to to is increase

max_locks_per_transactionso that you have a lock table that is big enough to contain a lock on all objects you want to drop.Not when the DROPed owner can own an arbitrary number of SCHEMAs.

I.e. there is no number one can come up with to cover that situation.

max_locks_per_transactionhas a theoretical upper limit of 2147483647, and you'd have to multiply that bymax_connectionsto get the size of the lock table that is allocated.That is a theoretical limit, since you'd go out of memory there.

But I seriously doubt that you have so many objects in all your schemas that a lock per object won't fit inside your RAM.

I'm referring specifically to this 22-posts thread: https://www.spinics.net/lists/pgsql/msg209900.html

In case that makes it clearer. I got the impression from that thread setting a too-high limit wasn't really an option.

Yes, setting a huge

max_locks_per_transactionis not a great idea, because that memory is allocated on server start and won't be freed until you stop PostgreSQL. But I bet that it doesn't require all your RAM, and there is always the option to crank up the parameter very high just for that one command, restart, executeDROP OWNED, reduce the parameter and restart again.To get an upper limit for the number of locks required, run

SELECT count(*) FROM pg_class;. Divide that value bymax_connectionsand use it formax_locks_per_transaction.