By Kaarel Moppel - Last week the first release candidate of the upcoming Postgres version 11 was released, stating that everything is going more or less as planned – great news! The 11 release is promising a lot of very cool stuff as usual, including – better partitioning, more parallezation and JIT capabilities (which are not activated by default though). This blog is about testing the query performance of v11.

Besides those headline features, there are hundreds or rather thousands (git diff --shortstat REL_10_5..REL_11_RC1 says "3192 files changed, 324206 insertions, 178499 deletions") of smaller changes, and some of them for sure boosting performance, also on some typical queries. Release notes didn't state any numerical performance indicators though as usually, so people have to find it out on their own – so that's what this blog post is about. I'm basically running 4 quite simple analytical/aggregate type of queries and laying out the numbers for you to evaluate – so jump at the summarizing table end of the post or get into the details by reading on.

I carried over 3 out of 4 test queries from the last year's similar test for 9.6 vs 10 (check it out here if you're into archaeology) and added one query to test index traversing performance. Queries are all tied to the schema generated by our good old friend pgbench, with one minor adjustment – creating a copy of the pgbench_accounts table be able to simulate a join on two 1 million rows tables. This time I also didn't disable the parallel features as they're "on" by default for both versions and thus probably won't be touched for most setups.

|

1 2 3 4 5 6 7 8 9 10 11 |

/* Sum up 50mio rows */ SELECT sum(abalance) FROM pgbench_accounts CROSS JOIN generate_series(1, 5) /* Unique columns joining */ SELECT count(*) FROM pgbench_accounts JOIN pgbench_accounts_copy using (aid) /* Using Grouping Sets analytical feature */ SELECT count(*) FROM (SELECT aid, bid, count(*) FROM pgbench_accounts GROUP BY CUBE (aid, bid)) a; /* B-tree index traversing */ SELECT COUNT(DISTINCT aid) FROM pgbench_accounts where aid % 2 = 0; |

For pbench "scale" I chose 100, meaning 10 Million rows (1.3GB) will be populated into the pgbench_accounts table. This gives enough rows but still fits into memory so that runtimes are more predictable.

I spun up a lower end node on Linode, labelled "Linode 8GB" (4 cores, 8GB RAM, 160 GB SSD, Ubuntu 18.04) but because we're not testing hardware, but comparing 2 Postgres versions, it should be fine. Also, even though we've got an SSD, the disk doesn't really matter here, as it's a read-only test and I've chosen queries and the test data amount in a way that everything will fit into RAM. This is giving us an algorithmic comparison. For additional testing fairness my test script also performed queries in turns between 10.5 and 11 RC1 to try to suppress the effects of possible background load jitter of a shared virtual machine.

For running Postgres I used official the official Postgres project maintained repo for both 10 and 11. Concerning server settings I left everything to defaults, except below changes on both clusters for reasons described in comments.

|

1 2 3 4 5 |

shared_buffers='2GB' # to ensure buffers our dataset work_mem='2GB' # increase work_mem as it helps a lot for analytical stuff max_parallel_workers_per_gather=2 # the default actually, so just as a reminder shared_preload_libraries='pg_stat_statements' # for storing/analyzing test results logging_collector=on |

After running my test script (6h on both clusters), which basically does all the work out of the box and can be found here, I got the numbers below.

| Query | Instance | Mean time (s) | % Change | Stddev time (ms) | % Change |

|---|---|---|---|---|---|

| SUM | 10.5 | 1.54 | 32.5 | ||

| SUM | 11RC1 | 1.39 | -9.7 | 24.2 | -25.5 |

| JOIN | 10.5 | 3.75 | 74.5 | ||

| JOIN | 11RC1 | 3.84 | +2.3 | 72.0 | -3.5 |

| CUBE | 10.5 | 15.0 | 852 | ||

| CUBE | 11RC1 | 9.58 | -56.6 | 542 | -57.2 |

| INDEX | 10.5 | 3.23 | 199 | ||

| INDEX | 11RC1 | 2.16 | -49.5 | 167 | -19.2 |

So what do these numbers tell us? 2 queries out of 4 have improved very significantly! +50% is very good, given how refined Postgres algorithms already are. The INDEX query can be easily explained by the fact that previously index access could not be parallelized. For the CUBE (grouping sets feature) I didn't find anything from the release notes. It would be interesting to know though so please comment if you have some insights. The other 2 queries are "on par" and the small 2.3% JOIN loss (although latencies are more consistent now) is being sufficiently compensated for on other fronts.

To sum it up – the general impression from 11 RC1 is very positive. The numbers look much better compared to last year's improvements. So hopefully it will be an awesome release and a relief for those who have to deal with tons of data - personally I'm itching to upgrade some systems already:)

NB! Not to forget – these are just "some" queries that I conjured up and it's also a RC release so although it looks very promising I wouldn't take those numbers as a guarantee for anything outside of the described test set.

By Kaarel Moppel: After a recent chat with a DBA who was taking his first steps towards migrating a big database away from Oracle to Postgres, I thought there are not enough articles on PostgreSQL features and "hacks" to achieve scalability. People want to know how to squeeze the last out of the hardware and safely accommodate decent multi-terabyte size databases. Since there are quite a few options out there, I was very surprised that there is so much fear that PostgreSQL is somehow very limited as far as scaling goes. Maybe it used to be so, (I started with Postgres in 2011), but in the year 2018, things are pretty solid. So please do read on for some ideas on how to juggle terabytes with ease.

If you don’t like to sweat too much or do any pioneering, then the safest way to scale would be to stick with proven out-of-the-box features of Postgres - so first, I’d recommend you take a look at the following keywords with some short explanations. Maybe that's all you need.

For a complex OLTP system, supporting hundreds of freaky queries, it is very common that indexes actually take much more disk space than the table files holding the data. To improve that (especially for indexes that are used infrequently) you can drastically reduce the index sizes with appropriate use of partial, BRIN, GIN or even a bit experimental BLOOM indexes. In total, there are 7 different index types supported. Most people only know about and use the default B-tree – a big mistake in a multi-TB setting!

Partial indexes only allow a subset of the data. For example, in a sales system we might not be interested in fast access to orders in status “FINISHED” (some nightly reports usually deal with that, and they can take their time), so why should we index such rows?

The most commonly known non-default index type perhaps, has been around for ages (full-text search). It's perfect for indexing columns where there are a lot of repeating values. Think of all kinds of statuses, or good old Mr/Mrs/Miss. GIN only stores every unique column value once, as compared to the default B-tree, where you’ll have e.g. 1 million leaf nodes with the integer “1” in them.

(block-range a.k.a. min-max index) on the other hand, is something newer and very different. It’s a lossy index type with a very small disk footprint, where not all column values are indexed, but only the biggest and smallest values for a range of rows (1 MB section of a table by default). This still works very well on ordered values. It's perfect for time series data or other “log” types of tables.

might be exotic, but if you manage to find a good use case (“bitmap/matrix search”) for it, it can be up to 20x more efficient than traditional indexing. See this blog on trying out Postgres bloom indexes for an example use case, if it seems too abstract.

But why did I place the somewhat unoriginal topic of indexing on the top of the list? Because the big advantage of this solution is that you don’t need any application changes. The DBA can easily make it work behind the scenes, in a one-time effort! Perfect.

Postgres has been partitioning for 15 years I believe...but kind of in a “get your hands dirty” way. You had to do some low-level management of attaching partitions, adding check constraints and inserting rows to correct child tables directly. Or, you had to route them there via insert triggers on the parent table. All this is history starting from Postgres version 10; from then on, there is declarative partitioning. It gets even better with version 11, where the functionality can be called feature-complete, with overarching indexes enabling primary and foreign keys.

Why bother? Well, the advantages of partitioning are: it’s possible to cleanly separate “cold data” and “hot data”. That gives us some nice options like compacting old data maximally with VACUUM FULL or placing it on another media (see “tablespaces” below). As a side effect we have smaller indexes, which take a lot less space in the shared_buffers, so we have more space for data there. The effect is biggest on uniformly accessed data scenarios (by name/email/hash) where all parts of big indexes still need to be traversed/read/cached, but only a tiny percentage of them is actually used. Also similar to indexes, under favorable application conditions, partitioning can be implemented without any code changes by DBA's in the background.

As mentioned above – it is possible to move tables/ indexes selectively to various disk media with the help of tablespaces. Here you can achieve many different goals – save money by using slower/ affordable disk partitions for “cold” data, keep only the most recent/important data on fast/expensive media, use some special compressed file systems for data that has a lot of repetitions, or use some network shares or even in-memory file systems on remote nodes for massive non-persistent data. There are quite a few options. Management of tablespaces is also quite straightforward, however: the transfer of existing tables / indexes during live operation can be problematic due to full locking.

Starting from Postgres 9.6, it is possible to parallelize some common operations on data. In Postgres 10/11 the relevant parameter max_parallel_workers_per_gather is also enabled by default with the value of 2, thus max. 2 background processes used. For “Big Data” use cases, it might make sense to increase it even more (and also increase some related parameters). Also, it is to be expected that the support for operations that can be parallelized increases with every new major release, as it has so far been. The upcoming version 11, for example, can now do parallel hash joins, index scans and UNION-s.

Here we’re moving out of the “single node” or “scaling up” realm...but given the very reasonable hardware prices and availability of usable Postgres cluster management software (Patroni being our favourite) it is not only for the bigger “shops” but should be doable for everyman. This kind of scaling can of course only be used if you’re mostly just reading data...as currently (and in next years) officially there can only be a single “master/primary” node in a cluster that is accepting writes. Also, going down this road you’ll probably have to tackle some technical details (especially connection routing) depending on your selected technical stack, but actually Postgres 10 did add support for the multi-host use case on the driver level – so the batteries are included! See here for more.

Also, starting with Postgres 9.6 the replicas can be run in “mirrored” mode, so that it does not matter which node you’re running your SELECT on! As a friendly warning – this works well only if the read queries are purely OLTP (i.e., very fast).

So now we’re done with the conventional stuff...but if you’re ready to step off the beaten path and make slight adjustments to your application, or try out some funnily-named extensions, you could well squeeze out the last drop of performance from single-node hardware. Here’s what I mean:

What I call hybrid tables are actually based on Postgres’ excellent SQL MED standard implementation, also known as Foreign Data Wrappers. They basically look like normal Postgres tables for read queries, but the data might reside or be piped over from literally anywhere. It might be coming from Twitter, LDAP or Amazon S3, see the wiki on fdw's for the full list of crazy datasources supported. In practice, the most used application of Foreign Data Wrappers (FDW-s) probably makes normal (correctly formatted) files look like tables. For example, exposing the server log as a table to make monitoring easier.

Where’s the scaling part, you may ask? The FDW approach works very well in the sense that it enables you to reduce the amount of data by using some clever file formats or compression. That typically reduces the data size 10-20x, so that the data would fit on the node! This works very well for “cold” data, leaving more disk space/cache available for real tables with “hot” data. As of Postgres v10 it is also very easy to implement – see some sample code here.

Another very promising use case is to use the columnar data storage format (ORC). Take a look at the “c_store” extension project for more info. It’s especially suited to scaling large data warehouses, with tables up to 10x smaller and queries up to 100% faster.

Why didn’t I add this feature to the above “Standard Postgres facilities” section, since the Foreign Data Wrapper infrastructure is firmly built into Postgres? Well, the downside is that you cannot usually change data via SQL and add indexes or constraints, so its use is a bit limited.

This is pretty much the same info as in the previous point – but brings in table partitioning and has child tables residing on remote nodes! The data could be planted to a nearby Postgres server and pulled in automatically, as needed over the network. Actually, they don’t even have to be Postgres tables! It could well be MySQL, Oracle or MS SQL any other popular server that works well for some subset of queries. Now how cool is that? The best results are to be expected from Postgres-to-Postgres interactions though as only “postgres_fdw” supports all write operations, transactions and clever filter push-downs so that data amounts passed over the wire are minimized.

Happy terabyte-hunting!

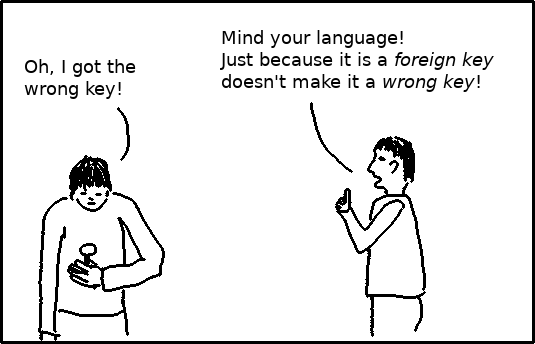

Foreign key constraints are an important tool to keep your database consistent while also documenting relationships between tables.

A fact that is often ignored is that foreign keys need proper indexing to perform well.

This article will explain that and show you how to search for missing indexes.

In the following, I will call the table, on which the foreign key constraint is defined, the source table and the referenced table the target table.

The referenced columns in the target table must have a primary key or unique constraint. Such constraints are implemented with unique indexes in PostgreSQL. Consequently, the target side of a foreign key is automatically indexed.

This is required so that there is always a well-defined row to which the foreign key points. The index also comes handy if you want to find the row in the target table that matches a row in the source table.

In contrast to the above, PostgreSQL requires no index at the source of a foreign key.

However, such an index is quite useful for finding all source rows that reference a target row. The typical cases where you need that are:

1. You perform a join between the two tables where you explicitly search for the source rows referencing one or a few target rows. If there is an index on the columns at the source, PostgreSQL can use an efficient nested loop join.

This is well known and pretty obvious.

2. You delete rows or update key columns in the target table.

Then PostgreSQL has to check if the foreign key constraint is still satisfied. It does so by searching if there are rows in the source table that would become orphaned by the data modification. Without an index, this requires a sequential scan of the source table.

Let's build a source and a target table:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 |

-- to make the plans look simpler SET max_parallel_workers_per_gather = 0; -- to speed up CREATE INDEX SET maintenance_work_mem = '512MB'; CREATE TABLE target ( t_id integer NOT NULL, t_name text NOT NULL ); INSERT INTO target (t_id, t_name) SELECT i, 'target ' || i FROM generate_series(1, 500001) AS i; ALTER TABLE target ADD PRIMARY KEY (t_id); CREATE INDEX ON target (t_name); /* set hint bits and collect statistics */ VACUUM (ANALYZE) target; CREATE TABLE source ( s_id integer NOT NULL, t_id integer NOT NULL, s_name text NOT NULL ); INSERT INTO source (s_id, t_id, s_name) SELECT i, (i - 1) % 500000 + 1, 'source ' || i FROM generate_series(1, 1000000) AS i; ALTER TABLE source ADD PRIMARY KEY (s_id); ALTER TABLE source ADD FOREIGN KEY (t_id) REFERENCES target; /* set hint bits and collect statistics */ VACUUM (ANALYZE) source; |

Looking up source rows via the link to target and deleting rows from target is unreasonably slow:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 |

EXPLAIN (ANALYZE) SELECT source.s_name FROM source JOIN target USING (t_id) WHERE target.t_name = 'target 42'; QUERY PLAN ---------------------------------------------------------------- Hash Join (cost=8.45..19003.47 rows=2 width=13) (actual time=0.150..360.920 rows=2 loops=1) Hash Cond: (source.t_id = target.t_id) -> Seq Scan on source (cost=0.00..16370.00 rows=1000000 width=17) (actual time=0.011..155.964 rows=1000000 loops=1) -> Hash (cost=8.44..8.44 rows=1 width=4) (actual time=0.111..0.111 rows=1 loops=1) Buckets: 1024 Batches: 1 Memory Usage: 9kB -> Index Scan using target_t_name_idx on target (cost=0.42..8.44 rows=1 width=4) (actual time=0.105..0.107 rows=1 loops=1) Index Cond: (t_name = 'target 42'::text) Planning time: 0.701 ms Execution time: 360.982 ms (9 rows) EXPLAIN (ANALYZE) DELETE FROM target WHERE target.t_name = 'target 500001'; QUERY PLAN ---------------------------------------------------------------- Delete on target (cost=0.42..8.44 rows=1 width=6) (actual time=0.178..0.178 rows=0 loops=1) -> Index Scan using target_t_name_idx on target (cost=0.42..8.44 rows=1 width=6) (actual time=0.107..0.109 rows=1 loops=1) Index Cond: (t_name = 'target 500001'::text) Planning time: 0.165 ms Trigger for constraint source_t_id_fkey: time=153.804 calls=1 Execution time: 154.030 ms (6 rows) |

After creating the appropriate index:

|

1 |

CREATE INDEX source_t_id_idx ON source (t_id); |

the queries are as fast as they should be:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 |

EXPLAIN (ANALYZE) SELECT source.s_name FROM source JOIN target USING (t_id) WHERE target.t_name = 'target 42'; QUERY PLAN ---------------------------------------------------------------- Nested Loop (cost=0.85..19.89 rows=2 width=13) (actual time=0.068..0.076 rows=2 loops=1) -> Index Scan using target_t_name_idx on target (cost=0.42..8.44 rows=1 width=4) (actual time=0.048..0.049 rows=1 loops=1) Index Cond: (t_name = 'target 42'::text) -> Index Scan using source_t_id_idx on source (cost=0.42..11.43 rows=2 width=17) (actual time=0.013..0.019 rows=2 loops=1) Index Cond: (t_id = target.t_id) Planning time: 1.238 ms Execution time: 0.147 ms (7 rows) EXPLAIN (ANALYZE) DELETE FROM target WHERE target.t_name = 'target 500001'; QUERY PLAN ---------------------------------------------------------------- Delete on target (cost=0.42..8.44 rows=1 width=6) (actual time=0.135..0.135 rows=0 loops=1) -> Index Scan using target_t_name_idx on target (cost=0.42..8.44 rows=1 width=6) (actual time=0.091..0.094 rows=1 loops=1) Index Cond: (t_name = 'target 500001'::text) Planning time: 0.151 ms Trigger for constraint source_t_id_fkey: time=0.557 calls=1 Execution time: 0.751 ms (6 rows) |

The following query will list all foreign key constraints in the database that do not have an index on the source columns:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

SELECT c.conrelid::regclass AS "table", /* list of key column names in order */ string_agg(a.attname, ',' ORDER BY x.n) AS columns, pg_catalog.pg_size_pretty( pg_catalog.pg_relation_size(c.conrelid) ) AS size, c.conname AS constraint, c.confrelid::regclass AS referenced_table FROM pg_catalog.pg_constraint c /* enumerated key column numbers per foreign key */ CROSS JOIN LATERAL unnest(c.conkey) WITH ORDINALITY AS x(attnum, n) /* name for each key column */ JOIN pg_catalog.pg_attribute a ON a.attnum = x.attnum AND a.attrelid = c.conrelid WHERE NOT EXISTS /* is there a matching index for the constraint? */ (SELECT 1 FROM pg_catalog.pg_index i WHERE i.indrelid = c.conrelid /* it must not be a partial index */ AND i.indpred IS NULL /* the first index columns must be the same as the key columns, but order doesn't matter */ AND (i.indkey::smallint[])[0:cardinality(c.conkey)-1] OPERATOR(pg_catalog.@>) c.conkey) AND c.contype = 'f' GROUP BY c.conrelid, c.conname, c.confrelid ORDER BY pg_catalog.pg_relation_size(c.conrelid) DESC; |

The result is ordered by the size of the table so that the tables, where the missing index hurts most, are listed on top.

all foreign keys?If the source table is small, you don't need the index, because then a sequential scan is probably cheaper than an index scan anyway.

Also, if you know that you never need the index for a join and you will never delete a row or update a key column in the target table, the index is unnecessary.

There is one simple way to proceed: create all missing indexes, wait a couple of days and then get rid of the indexes that were never used.

In order to receive regular updates on important changes in PostgreSQL, subscribe to our newsletter, or follow us on X, Facebook, or LinkedIn.