It's commonly known that setting max_connections to an insanely high value is not too good for performance. Generations of system administrators have followed this rule. Just out of curiosity I thought I'd give it a try to see which impact setting max_connections in PostgreSQL really has.

UPDATE 2023: See also this more recent blog post by Laurenz Albe: max_connections tuning in PostgreSQL

Table of Contents

Again we have used the same hardware to test: 8 AMD cores running at 4 Ghz, 16 GB of RAM, Ubuntu 12.04 as well as a Samsung 840 SSD. Benchmark was running on the box.

To prepare for the test we have created a simple test set using pgbench. The scale factor was set to 100, so that we are running the test using 10 mio rows. Shared buffers are set to 2 GB.

In a first test we used various settings for max_connections - but, we kept the number of actually open database connections constant. In our case we used CONNS=8 and let the read only benchmark run for 20 min:

|

1 |

pgbench -S -j $CONNS -M prepared -T $T $DB -c $CONNS |

The results are pretty constant. Even if max_connections is massively increased in postgresql.conf, the performance curve of PostgreSQL is totally flat. This is somewhat expected.

The number of transactions per second stays constant at 150.000 / second (reads). So, what is the point of setting max_connections to a low value then?

In the next test we want to modify the test setup a little. Again we used 8 working connections running pgbench just like before. But, to make things worse for PostgreSQL we filled up the remaining connections with idle connections. To do so we created database connections running pg_sleep() in background. In short: In case of the 1024 connection test, we had 8 working and 1016 SELECTs running a sleep (= active but idle).

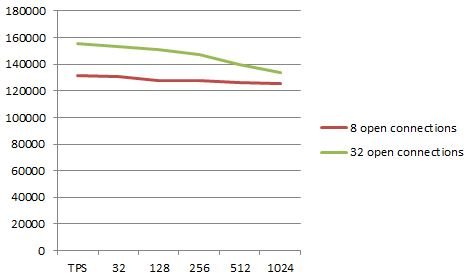

In this case the results start to be interesting. The more connections we got, the lower our TPS count will be. Although the decrease is by no means large, it is still stable and can be observed clearly in the results:

with idle conns (8 workers)

|

1 2 3 4 5 6 7 |

hs@chantal:~/benchmark$ ./run.sh 2> /dev/null 32: tps = 131706.112 128: tps = 130875.520 256: tps = 127894.491 512: tps = 127413.512 1024: tps = 126034.739 2048: tps = 125077.974 |

Let us repeat the very same test with 32 open connections now and see if the change in throughput given more connections is constant or not.

Here are the results:

|

1 2 3 4 5 6 |

32: tps = 155681.582 128: tps = 153041.9681 256: tps = 151295.710 512: tps = 147398.063 1024: tps = 139920.943 2048: tps = 133637.017 |

What we see here is a substantially higher decline (percentage wise). This is somewhat important because the more loaded the system is, the higher the impact is going to be in this case.

To sum up things we got to point out that max_connections alone is not what causes issues. It is more the combination of many idle connections along with many active ones.

In order to receive regular updates on important changes in PostgreSQL, subscribe to our newsletter, or follow us on Facebook or LinkedIn.

Thanks for looking into this. Always great to check how valid "common knowledge" is (or continues to be, with newer PG versions). On that note, which version were you testing here? Would also be interesting to see if totally connections would differ from the SELECT pg_sleep(), as that seems to be a common case if someone isn't using pooling (lots of app instances sitting around, connected to PG, but not doing anything).

In these cases he uses the newest PG version available. At the time of this post it was 9.3.2.

Do you know why the initial TPS is higher with 32 open connections compared to 8 open connections? I would assume the green curve should already be equal or lower than the red curve, and then decline more rapidly.

i would assume that the time needed to send stuff back and forth (pgbench postgres) cannot be used productively to execute stuff. it seems to be better if every CPU core has a moderate number of concurrent clients.

That's great to know that having a lot (say, 500) idle connections doesn't really hurt performance that much. I thought the difference was much higher but never bothered to check.