by Kaarel Moppel

Table of Contents

Pretty often I’m asked: Does our PostgreSQL monitoring tool pgwatch2 work with managed cloud database service providers like Google Cloud? Well, the short answer would be, “Yes, we scan!”. But as always the devil is in the details. You should be aware of a couple of nuances/extra steps.

In order to shed some light on the topic and to document the process a bit, I’ve put together a small “how-to”. Here's how to use pgwatch2 with Google Cloud’s PostgreSQL offering, as an example of a managed database service.

A small disclaimer though - I haven’t personally tried out all the newest/more niche cloud providers. So I wouldn’t give a 100% guarantee on that promise, but rather a 99.9% one 🙂

And if the name pgwatch2 doesn’t sound too familiar, it’s one of the most popular Open Source Postgres-specific monitoring tools out there! It supports many flexible deployment options, metric fetching features, and data stores. More info about the project can be found in the official documentation. If you prefer looking at the code, then jump right to Github.

But before we go hands-on: What does a managed database service, like Google Cloud SQL's offering, actually consist of? Why wouldn't it work?

For those who don't work with the ins and outs of database systems daily, it might not be so apparent. In most cases, the thing that you get after clicking “$mydb > Next > Next > Launch” or its equivalent is actually very close to a vanilla PostgreSQL instance! In other words, you're getting almost the same thing that you would get when typing apt install postgresql on your server or workstation, at least in standard SQL matters of schema and data management, i.e. for DDL and DML.

The biggest difference (apart from the good things like automatically taking care of high availability, backups, and updates) is actually the fact that you’re being stripped of security-sensitive features, like not being handed a real superuser account with the ability to execute arbitrary commands on the operating system level! Which is a scary thing I must agree 🙂 Read more about that here if you’re not quite sure what I mean. But there are also other aspects, like the removal of advanced configuration features. So basically the limitations could be summarized within a few groups:

Obviously, it's well justified to disable arbitrary command execution with COPY PROGRAM or archive_command — but this also includes no file system access via pg_ls_dir(), etc. That would hint at implementation details or provide extra information for possible attack vectors to break out of the so called “sandbox”.

Here the reasoning mostly is that you should not shoot yourself in the foot and act in accordance with the booked hardware resources. But there are also some more sneaky considerations like for example nudging you towards vendor lock-in, by disabling PostgreSQL’s built-in Logical Replication that basically can enable relatively painless, near-zero downtime, migrating to another service provider or to a self-managed full-featured Postgres instance. Feel free to read more about it here, if the concept seems new to you.

This covers limiting/controlling both the pg_hba.conf infrastructure so that for example SSL is always required or access is guaranteed to be possible only from within that cloud account, and also aspects like limiting the “pseudo-superuser” so that, by default, it does not even see other people’s running queries.

All cloud providers, including Google Cloud, only allow a short whitelist of pre-approved extensions to be activated, mostly those extensions from the official “contrib” package, since it’s a lot of work to both review the code and guarantee that it’s stable and secure. This is of course understandable, but at the same time, also the most annoying thing about the managed services for me personally as there are dozens and dozens of great PostgreSQL extensions out there and this extensibility infrastructure is the key factor to PostgreSQL’s constantly rising popularity.

But then again it’s not all bad — there are the other aspects that, especially for more casual or novice database users, make them a good choice: hiding complexity like HA/failover; backups; server configuration; and providing some basic metrics.

Since all managed service providers also provide some kind of performance metrics and dashboards out of the box, which in the case of Google’s offering actually looks pretty decent (it runs under the name of “Query insights”), the big question is - why should one use an extra tool at all?

Well, I can think of some good reasons:

There’s probably some truth to the old idiom - “You can't teach an old dog new tricks”. People usually don’t want to constantly work with new tools that are only marginally better than old ones. The usefulness is in the end somewhat limited by what the Postgres engine can offer on the instrumentation side.

What if your company uses many different cloud database service providers in addition to running some self-managed ones? Do you really want to maintain the mental overhead of knowing all the details of the different providers and translating between different vocabularies? Cloud providers obviously have not managed to agree on a common glossary. I haven't yet mentioned constantly logging into different environments, or searching for the correct browser tab. Having a single tool with all the metrics from different systems is obviously a better choice.

What if your development team is highly segregated for security reasons? And doesn’t have common access to the graphs/API that the service provider offers? What if you have some freelance consultants on your team and you only want to expose a relevant subset of the metrics to them? In such cases, it might be better to collect, manage and expose metrics according to your own rules.

Since managed services have a huge user base, they must generally be implemented in a pretty simplistic way. People that are not database experts need to be able to jump right in and get going in minutes. This again might not be optimal for database professionals who want to see more detailed information.

What if your cloud provider restricts your ability to adjust the frequency of background metrics collection? What if you prefer to visualize your data in a specific format, such as tables instead of graphs? Additionally, what if you want to gather all the metrics that PostgreSQL provides? You'll find you have much more flexibility with 3rd party tools.

One area where the cloud service providers for some reason have not yet done their best is in making it easy to set up basic alerts. Providing a flexible as well as an easy-to-use interface, similar to what Grafana offers, would definitely be a boon for “cloud migrants”.

All this positive stuff being said, nothing is perfect, sadly 🙂 External tools also have one huge downside when it comes to monitoring managed databases – we don’t have convenient access to the OS-level metrics! Luckily, this data is mostly available somewhere in the stomach of the behemoth. It can be extracted via some kind of API, but you generally need to write custom code for that. As an alternative, with some tools like Grafana (which supports mixed datasource graphs), it can be also integrated to be fetched on the fly. There may be some extra lag. But then again, retention policies could still become a problem, if you’d like to occasionally look at data that is many months old.

In short – having options is a good thing and I would generally not want to just rely on my cloud provider knowing what is best for me. At least for more important databases.

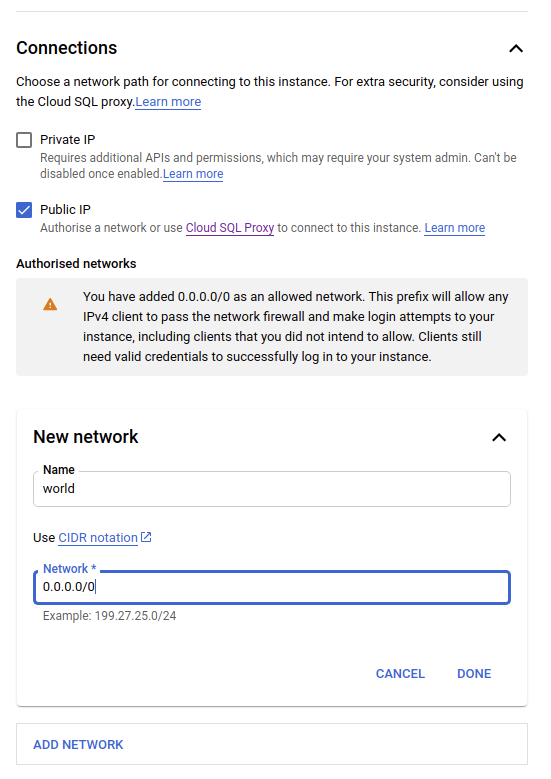

Initially, I thought about skipping this section... as the GUI is actually quite simple and neat, and things should be self-explanatory. However, there were a couple of configuration sections where I had to pause for a bit and think, so I’ll post screenshots about those.

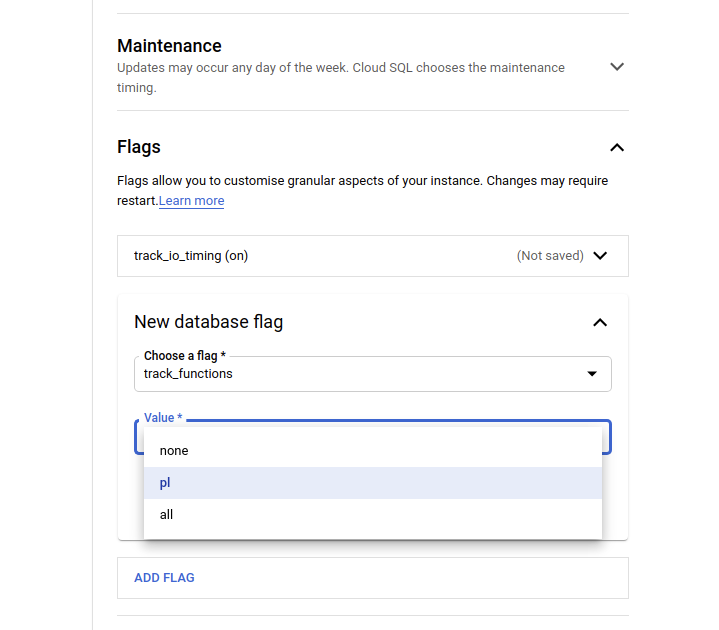

The thing is that all the biggest cloud providers provide a multitude of managed database engines. In an attempt to unify the vocabulary, they might have opted for some wording that in PostgreSQL's case is not obvious. For example “Flags” mean PostgreSQL server configuration parameters!

Note that I’m not covering the prerequisite part about setting up an account and hooking up a credit card, etc.

Due to the fact that we are only interested in monitoring here, we’ll also need to add an extra step. We need to create a monitoring role to be used by the pgwatch2 metrics collector. It’s never recommended to monitor with the default admin/superuser account over a remote connection! Luckily, starting from v10, the Postgres project caters for that common monitoring need. There’s a special system grant called pg_monitor for that purpose. pg_monitor elevates the privileges just a bit. The steps below are listed in the documentation and also on Github's mini-how-to.

|

1 2 3 4 |

CREATE USER pgwatch2 WITH PASSWORD 'secret'; GRANT pg_monitor TO pgwatch2; -- pg_monitor is a special system role for metrics access |

I'm skipping the actual installation of pgwatch2 here. Please follow the documentation for that. Basically, once we have the metrics agent and the metrics storage DB in place (Postgres with TimescaleDB recommended!) we just need a simple YAML config file.

As I already mentioned in the introductory section, no problems are expected and it should just work! Given that you’re using the correct configuration, of course 🙂 And here, since managed cloud services are becoming increasingly popular, we’ve now made it pretty easy on the pgwatch2 side. pgwatch2 has some preset configurations tuned for various engines! In the case of Google Cloud, an example YAML configuration entry (assuming a file-based installation) should use an according preset config named “gce”.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

- unique_name: gce_mon dbtype: postgres # use postgres-continuous-discovery here if want to monitor all Dbs, # optionally according to a regex pattern host: 1.2.3.4 # paste your real IP/hostname here port: 5432 dbname: postgres # monitoring a single DB only here user: pgwatch2 password: # actual password is in the .pgpass file stmt_timeout: 5 # seconds preset_metrics: gce is_enabled: true sslmode: require |

After that's in place, we can test start the pgwatch2 metrics collector and see if it looks good:

|

1 2 3 4 5 6 7 8 |

$ pgwatch-daemon -c /etc/pgwatch2/config/instances.yaml --datastore=postgres –pg-metric-store- conn-str=”postgresql://pgwatch2@localhost:5432/pgwatch2_metrics” 2021/05/06 14:10:05 WARN main: --metrics-folder path not specified, using /etc/pgwatch2/metrics 2021/05/06 14:10:06 ERRO MetricGathererLoop: Failed to fetch metric data for [gce_mon:stat_statements_calls]: pq: relation 'pg_stat_statements' does not exist 2021/05/06 14:10:06 ERRO MetricGathererLoop: Failed to fetch metric data for [gce_mon:stat_statements]: pq: function get_stat_statements() does not exist |

Hmm, some errors. Well, it doesn’t look good for a “preset config”, you might think. But in this case, it's actually expected for a totally fresh and pristine instance. Since Postgres is not actually 100% pre-configured for exhaustive out-of-the-box monitoring, a couple of small moves are required. These are covered by the next chapter.

???? If you’d like to see more details on these errors, or in general see what pgwatch2 is actually doing, then set the --verbosity parameter to info level. There’s also a debug level, but that’s meant rather for bug tracking/developing. It will overflow you with information.

Basically, we need to do 2 things. The first one is a “must have” for all Postgres databases that could get into performance issues at some point, or where we need some visibility of what users are actually executing more often. The 2nd one is actually more of a “nice to have”. However, I always enable it on at least semi-modern hardware. Without it, you’re fine. It's just that some preset Grafana dashboards provided by the pgwatch2 project will look a bit more empty, no biggie.

For most managed services, the extension itself is already activated and present in shared_preload_libraries - if not, you most definitely are allowed to activate it, as it’s the single most useful extension for performance troubleshooting and comes bundled with Postgres by default. Note that you need to execute the code listed below for all databases that you plan to monitor!

|

1 |

CREATE EXTENSION pg_stat_statements; |

Basically, we need to enable the track_io_timing parameter and set track_functions to at least pl. Note that the all setting is normally not needed unless you plan to optimize some C functions coming in from extensions. For the Google service, this would look something like what you see below. Remember, server configuration parameters/settings are called “flags” on Google Cloud!

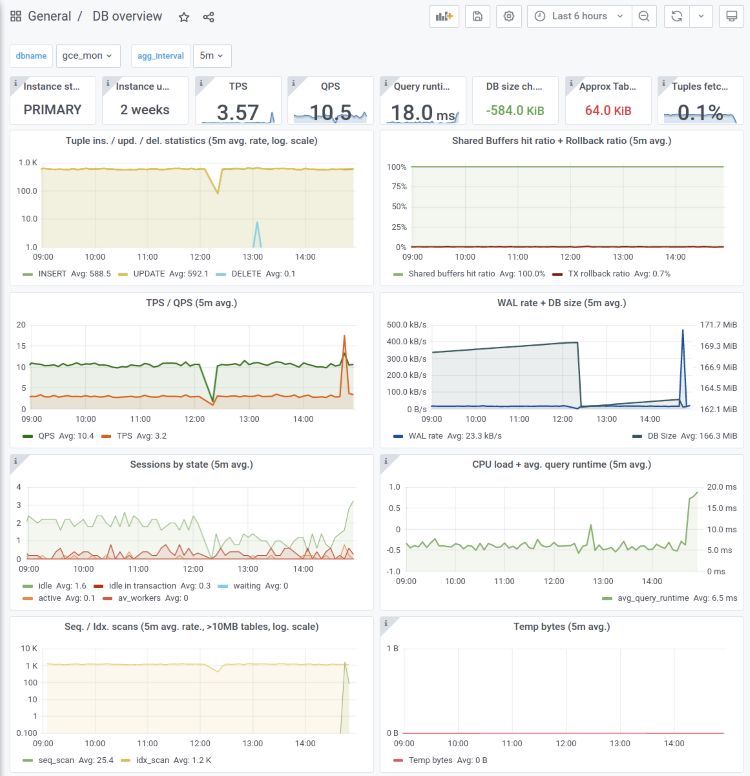

Given that our metrics collection daemon has been running for a while, we can finally fire up Grafana to look at some nice graphs! The usability and features of Grafana together with a bunch of ready-made dashboards to cover all common monitoring needs are one of the main reasons why users like pgwatch2. It makes diving into the metrics quite effortless. Changing the visual side just takes a couple of clicks. That's something that's not possible with the tools provided by the cloud vendors!

Note that when starting from scratch, you need to import the pgwatch2 dashboards into Grafana. This can be done manually “per dashboard”, or also with an “import all” script, exemplified in the documentation here.

Basically, the majority of dashboards should work as when monitoring a local setup. Some dashboards like the “Systems Stats” will be unusable and empty because we cannot create PL/Python helpers on the managed server to extract CPU load, etc.

Also, instead of the standard “DB overview” dashboard that most often serves as a starting point when reports of weird database behavior come in, you might prefer using the “DB overview unprivileged” dashboard. It's compiled exactly for such cases where the metrics agent might not have full access. In this case, we only show metrics that are always there, even for plain users with only a CONNECT privilege. It looks like the following:

To wrap it all up! As we saw, pgwatch2 can be configured to work Google Cloud SQL with only a few modifications. It should actually be similarly easy with all other flavors of PostgreSQL. And compared to service provider instrumentation, it shines! Especially in that, it provides many more dashboards (around 30) to cover all possible problem areas. It also allows you to easily define new custom metrics via SQL. That means they can also come from the business domain. So you can have some nice sales counters right together with your critical database metrics.

The peculiarities of using popular managed cloud services with pgwatch2 are also documented in a separate section. Check that out here for additional hints and tips if you're planning to give pgwatch2 a go for your cloud instance.

Leave a Reply