Table of Contents

When processing a big result set in an interactive application, you want to paginate the result set, that is, show it page by page. Everybody is familiar with that from the first web search on. You also get a button to scroll to the next page, and you get a total result count. This article shows the various options for pagination of a result set and their performance. It also discusses the problem of the total result count.

Let's create a table with enough time series data:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

CREATE UNLOGGED TABLE data ( id bigint GENERATED ALWAYS AS IDENTITY, value double precision NOT NULL, created timestamp with time zone NOT NULL ); /* make the example repeatable */ SELECT setseed(0.2740184); INSERT INTO data (value, created) SELECT random() * 1000, d FROM generate_series( TIMESTAMP '2022-01-01 00:00:00 UTC', TIMESTAMP '2022-12-31 00:00:00 UTC', INTERVAL '1 second' ) AS d(d); /* add a primary key */ ALTER TABLE data ADD PRIMARY KEY (id); /* set hint bits, create visibility map, gather statistics */ VACUUM (ANALYZE) data; |

The query that we are interested in is:

|

1 2 3 4 |

SELECT value, created FROM data WHERE value BETWEEN 0 AND 10 ORDER BY created; |

The best index for the query (at least on my machine) is a two-column index that allows PostgreSQL to use an index-only scan that supports the ORDER BY clause:

|

1 2 |

CREATE INDEX data_created_value_idx ON data (created, value); |

That index is good for fetching the first rows quickly, but it also is the best index for fetching the whole result set.

OFFSET and LIMITWe want to get the result set in chunks of 50 rows. Using OFFSET and LIMIT, we can do that as follows:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

/* the first page */ SELECT value, created FROM data WHERE value BETWEEN 0 AND 10 ORDER BY created LIMIT 50; /* page number 100 */ SELECT value, created FROM data WHERE value BETWEEN 0 AND 10 ORDER BY created OFFSET 4950 LIMIT 50; |

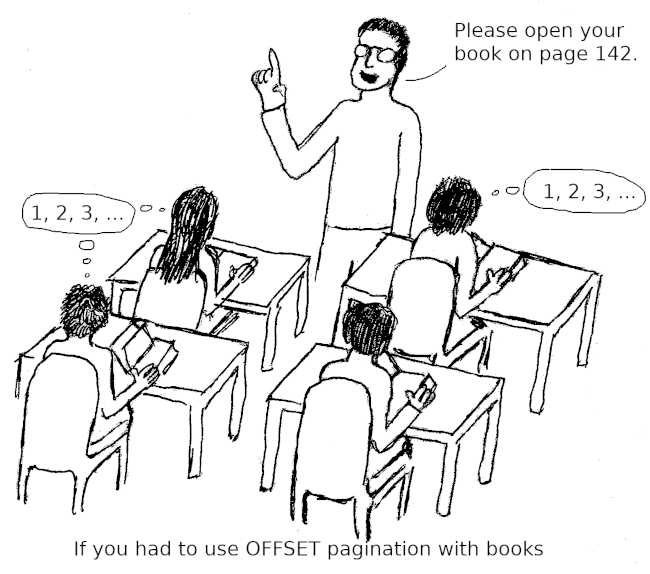

OFFSET and LIMITOFFSET and LIMITThere is one main advantage of this technique: it is simple and can be used with all queries. Also, as long as you only look at the first few pages, it can perform pretty well.

WITH HOLD cursorsCursors are the natural way to fetch result sets in chunks. However, normal cursors only work in the context of a single transaction. Therefore, normal cursors are not useful for pagination, because it is a very bad idea to have user interaction while a transaction is open: not only will a long transaction hold table locks for a very long time (which could block DDL or TRUNCATE statements), but it will also block the progress of autovacuum, which can lead to table bloat.

But you can use a WITH HOLD cursor. Such a cursor fetches the complete result set at the end of the transaction and materializes it on the server:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

START TRANSACTION; /* * Don't use SCROLL unless you need to scroll backwards, * for example to get the total count (see below). */ DECLARE c SCROLL CURSOR WITH HOLD FOR SELECT value, created FROM data WHERE value BETWEEN 0 AND 10 ORDER BY created; /* this will calculate the whole result set */ COMMIT; |

Now it is simple to fetch an arbitrary page:

|

1 2 3 |

/* get page number 100 */ MOVE ABSOLUTE 4950 IN c; FETCH 50 FROM c; |

Don't forget to close the cursor when you are done:

|

1 |

CLOSE c; |

It is a smart idea to fetch the first page before committing the transaction, because that COMMIT materializes the result set and can take a long time. During that time, the client can already render the first result page, and the user can look at it.

WITH HOLD cursorsOFFSET and LIMITWITH HOLD cursorsWITH HOLD cursors, since they are bound to the database connectionThis is the most advanced way to paginate the result set. It requires that we fetch the id column as well. The first page is fetched with:

|

1 2 3 4 5 |

SELECT id, value, created FROM data WHERE value BETWEEN 0 AND 10 ORDER BY created, id LIMIT 50; |

We have to remember the values for id and created from the last row of the page. Then we can fetch the next page with

|

1 2 3 4 5 6 |

SELECT id, value, created FROM data WHERE value BETWEEN 0 AND 10 AND (created, id) > ('2022-01-01 01:27:35+01', 5256) ORDER BY created, id LIMIT 50; |

where 2022-01-01 01:27:35+01 and 5256 are the values we remembered from the previous page. We need id as a tie-breaker in case there are multiple rows with the same value of created.

To make keyset pagination efficient, we need to create a special index that supports the second WHERE condition:

|

1 2 |

CREATE INDEX data_keyset_idx ON data (created, id, value); |

Keyset pagination would be fast enough without including value in the index, but I add it to get an index-only scan, so that the performance comparison with the other methods is fair.

OFFSET and LIMITThe following results were measured for the example in the beginning. I measured the response time on the client side via a local connection (average of five runs). In addition, I used EXPLAIN (ANALYZE, BUFFERS) to determine how many buffers the query had to process. All data were cached in shared buffers, and “random_page_cost” was set to 1.1.

| page 1 | page 10 | page 100 | |

|---|---|---|---|

OFFSET/LIMIT |

1.16 ms 25 buffers |

4.42 ms 184 buffers |

15.36 ms 1915 buffers |

WITH HOLD cursor |

642.28 ms (1.41 ms without COMMIT)120507 buffers |

0.34 ms

0 buffers |

0.51 ms

0 buffers |

| keyset pagination | 1.40 ms 30 buffers |

1.35 ms 31 buffers |

1.39 ms 24 buffers |

Pagination with OFFSET and LIMIT wins for the first page (perhaps because it has to fetch one column less), but keyset pagination is better if you have to fetch more pages. Cursor pagination can only compete if you COMMIT asynchronously, while the user is watching the first page. However, it is faster than the other methods for subsequent pages, since the result set is already calculated.

So far, we have seen that there are smart ways to paginate a query result. However, there is no smart way to get the total result count. You have to calculate the complete result set, then count it. The impact of that varies depending on the pagination method chosen:

OFFSET and keyset pagination, an extra expensive query is necessary. If only the first few pages are retrieved, the query to calculate the total result set count is usually more expensive than all other queries together.WITH HOLD cursor pagination, the whole result set is calculated in the beginning, so the result set count comes for free:

|

1 2 |

/* the result will be 'MOVE 314711' */ MOVE ALL IN c; |

No matter how you do it, calculating the whole result set is slow. I have seen many cases where the count(*) queries to calculate the total result set count were the most expensive queries in an application's workload. Therefore, my recommendations are:

EXPLAIN (FORMAT JSON) SELECT 1 FROM /* your query */” rather than “SELECT count(*) FROM /* your query */”. Then you can extract the row count estimate from the EXPLAIN output. See my article on counting rows for details. Remember that Google doesn't display exact counts either!When paginating results, you can choose between OFFSET/LIMIT, WITH HOLD cursors and keyset pagination. Each of these methods has its benefits and disadvantages, and you will have to decide which one is best in your case. Consider that displaying a total result set count is quite expensive, so avoid it whenever possible.

Hello Laurenz,

greate article, thanks.

A disavantage of keyset pagination is, you must known the values of last

row in then previous page. Random page selection is not possible with

it.

Best regard, Frank

Yes, that is not so straightforward. You can skip ahead several pages using

OFFSET, but I guess few people implement that.Is cursor helpful to rereive large dataset from database?

like data filtering with table joins and subqueries that makes the fetch slow?

Perhaps, perhaps not. It depends on the case.

If materializing the cursor at the end of the first transaction takes too long, it is not a good solution.