I am on a spree! After writing about breaking your database and transaction ID wraparound, here I am writing about damage caused by CHECK constraints! Trust me, I don't want to destroy PostgreSQL databases. It's just that this Twitter message was brought to my attention and triggered memories of other reports about CHECK constraints that caused problems.

Table of Contents

CHECK constraint correct?The documentation warns that there are rules you have to play by when using CHECK constraints:

PostgreSQL assumes that

CHECKconstraints' conditions are immutable, that is, they will always give the same result for the same input row. This assumption is what justifies examiningCHECKconstraints only when rows are inserted or updated, and not at other times.

That makes sense. Otherwise, PostgreSQL would have to check the condition whenever anything in the database changes (or when time has passed), which is not an option. However, PostgreSQL does not enforce that the constrained expression be IMMUTABLE.

IMMUTABLE not enforced in CHECK constraint expressions?PostgreSQL normally doesn't leave requirements like that to the discretion of the user. For example, trying to use a function that is not IMMUTABLE in an index definition causes the error:

|

1 |

ERROR: functions in index expression must be marked IMMUTABLE |

So why does PostgreSQL not enforce that for CHECK constraints? The reason is that there are valid use cases for CHECK constraints where the expression is not IMMUTABLE in the literal sense (but the constraint is). This is even required by the SQL standard. To quote ISO/IEC 9075-2:2003, chapter 11, verse 9:

<check constraint definition> ::= CHECK <left paren> <search condition> <right paren>5) The <search condition> shall simply contain a <boolean value expression> that is retrospectively deterministic.

Earlier on, the standard explains:

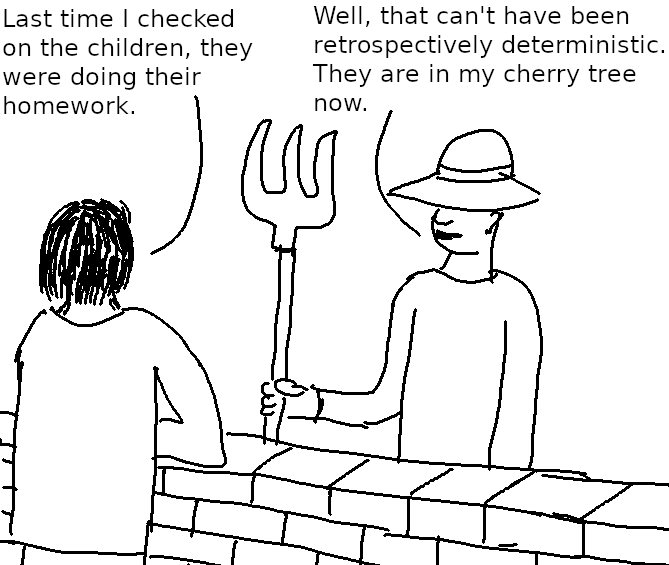

A retrospectively deterministic <boolean value expression> has the property that if it is True at one point time, then it is True for all later points in time if re-evaluated for the identical SQL-data by an arbitrary user with the identical set of privileges. The precise definition is found in Subclause 6.34, “<boolean value expression>”.

I'll spare you the “precise” definition of “retrospectively deterministic”. It is enough to bring tears to your eyes and is a prime example of how an attempt to formalize an idea can go wrong. What you can distill from it is that the standard committee had something like this in mind:

|

1 |

CHECK (colname < current_timestamp - INTERVAL '1' DAY) |

This is not an IMMUTABLE expression (current_timestamp can have a different value tomorrow), but certainly a reasonable CHECK constraint. Any row that satisfies that condition now will also satisfy it in the future.

UPDATEs with a bad CHECK constraintImagine we have a table

|

1 2 3 4 |

CREATE TABLE data ( id bigint PRIMARY KEY, lirum text NOT NULL ); |

and we have a table to commemorate deleted rows

|

1 2 3 4 5 6 |

CREATE TABLE history ( id bigint NOT NULL, archived_at timestamp with time zone NOT NULL, row text NOT NULL, view_count integer DEFAULT 0 NOT NULL ); |

that is populated by a trigger

|

1 2 3 4 5 6 7 8 9 10 11 12 |

CREATE FUNCTION archive() RETURNS trigger LANGUAGE plpgsql AS $$BEGIN INSERT INTO history (id, archived_at, row) VALUES (OLD.id, current_timestamp, OLD::text); RETURN NULL; END;$$; CREATE TRIGGER archive AFTER DELETE ON data FOR EACH ROW EXECUTE FUNCTION archive(); |

We want to create a CHECK constraint that enforces that the string has the correct format. So we define

|

1 2 3 |

/* never mind the IS NOT NULL, the type cast is the test */ ALTER TABLE history ADD CONSTRAINT row_correct CHECK (row::data IS NOT NULL); |

Let's add and delete some data:

|

1 2 3 4 |

INSERT INTO data (id, lirum) VALUES (1, 'some data'); DELETE FROM data WHERE id = 1; |

Sure enough, there is now a row in history:

|

1 2 3 4 5 6 |

TABLE history; id │ archived_at │ row │ view_count ════╪═══════════════════════════════╪═════════════════╪════════════ 1 │ 2023-01-26 07:26:07.460679+01 │ (1,'some data') │ 0 (1 row) |

At some later time, we need to create a new column for our table:

|

1 |

ALTER TABLE data ADD larum text NOT NULL; |

Next time we look at the history table and want to increase view_count, we get:

|

1 2 3 4 5 6 |

UPDATE history SET view_count = view_count + 1 WHERE id = 1 RETURNING id, archived_at, row; ERROR: malformed record literal: '(1,'some data')' DETAIL: Too few columns. |

What happened? By adding a column to the table data, we also changed the composite data type of the same name. That way, we broke “retrospective determinism”, because the data no longer match the changed data type. Even though we didn't change the checked value at all, the new row no longer satisfies the CHECK constraint.

A better solution for this requirement would have been a trigger. You can configure the trigger to check the data only once, when the row is inserted. It is also possible to define the trigger so that it checks the data whenever the row is updated, but only if “row” is modified.

CHECK constraintFor the sake of the example, let's consider a rental car management system:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

CREATE TABLE vehicle ( id bigint PRIMARY KEY, model text NOT NULL, seats smallint NOT NULL ); CREATE TABLE client ( id bigint PRIMARY KEY, name text NOT NULL, group_size smallint NOT NULL ); /* for the exclusion constraint */ CREATE EXTENSION IF NOT EXISTS btree_gist; CREATE TABLE rented ( vehicle_id bigint REFERENCES vehicle NOT NULL, client_id bigint REFERENCES client NOT NULL, from_to daterange NOT NULL, PRIMARY KEY (vehicle_id, client_id), EXCLUDE USING gist (from_to WITH &&, vehicle_id WITH =) ); |

Now it is not a good idea to rent out a small car to a big group, and we know that it is a good idea to enforce constraints in the database. So we want to have a constraint that keeps us from assigning a car to a client if the group size exceeds the number of seats in the car:

|

1 2 3 4 5 6 7 |

ALTER TABLE rented ADD CHECK ( (SELECT vehicle.seats FROM vehicle WHERE vehicle.id = rented.vehicle_id) >= (SELECT client.group_size FROM client WHERE client.id = rented.client_id) ); ERROR: cannot use subquery in check constraint |

What an annoying limitation! This shows us that PostgreSQL indeed places some limits on what we are allowed to do in a CHECK constraint. Fortunately we can work around it with functions:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

CREATE FUNCTION get_seats(bigint) RETURNS smallint BEGIN ATOMIC SELECT seats FROM vehicle WHERE id = $1; END; CREATE FUNCTION get_group_size(bigint) RETURNS smallint BEGIN ATOMIC SELECT group_size FROM client WHERE id = $1; END; ALTER TABLE rented ADD CHECK (coalesce(get_seats(vehicle_id), 0) >= coalesce(get_group_size(client_id), 0)); |

Great! That's much more readable anyway!

Let's verify that this works as intended:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

INSERT INTO vehicle (id, model, seats) VALUES (1, 'Mercedes Vito', 9), (2, 'Audi TT', 4); INSERT INTO client (id, name, group_size) VALUES (100, 'Albe', 5), (101, 'McDuck', 1); INSERT INTO rented (vehicle_id, client_id, from_to) VALUES (2, 100, '[2022-07-01,2022-07-14]'); ERROR: new row for relation 'rented' violates check constraint 'rented_check' DETAIL: Failing row contains (2, 100, [2022-07-01,2022-07-15)). INSERT INTO rented (vehicle_id, client_id, from_to) VALUES (1, 100, '[2022-07-01,2022-07-14]'); |

Looks good!

We perform database backups using

|

1 |

pg_dump -F c -f dumpfile cars |

On the dark day of data loss, when we try to restore our backup, we will get an unexpected error message:

|

1 2 3 4 5 |

pg_restore -d cars dumpfile pg_restore: error: COPY failed for table 'rented': ERROR: new row for relation 'rented' violates check constraint 'rented_check' DETAIL: Failing row contains (1, 100, [2022-07-01,2022-07-15)). CONTEXT: COPY rented, line 1: '1 100 [2022-07-01,2022-07-15)' pg_restore: warning: errors ignored on restore: 1 |

PostgreSQL dumps tables in alphabetic order to be deterministic. While it dumps most constraints in the end to speed up data loading and prevent integrity problems, it considers CHECK constraints to be part of the table definition and dumps them in the beginning. That is fine, since the expressions in CHECK constraints must be retrospectively deterministic and depend only on the row itself. Unfortunately, our cute constraint does not satisfy that requirement. PostgreSQL creates the tables, then restores the data for client, rented and vehicle in that order. So when rented is loaded, vehicle is still empty, get_seats returns 0 and the constraint fails.

Again, the correct solution would have been a trigger that throws an error if the condition is violated. While constraints have to be fulfilled all the time, triggers only fire at certain events. A dump contains trigger definitions at the end, and the triggers are not fired at all when the dump is restored.

If we want to make sure that the condition is always fulfilled, we can go a different way: we could add a copy of seats and group_size to rented and include these columns in the FOREIGN KEY constraints to make sure they are always identical. Then the CHECK constraint only has to compare columns of rented, and the problem is gone.

We saw two examples of how a badly defined CHECK constraint can break a database. The restore failure was particularly unpleasant. Sadly, these are not academic cases, and there have been reports about restore failures on the mailing lists. So make sure that your CHECK constraints are defined correctly, even if PostgreSQL cannot enforce that.

Apart from that, we have learned the pretentious phrase “retrospectively deterministic”, which may draw admiration or a shake of the head, depending on how gullible the audience is.

The source of this error is not CHECK constraint itself but unsafe datatype convertion used in CHECK constraint expression. You have used the datatype convertion with user-defined type, the datatype was altered, and now your expression fails. But it will fail in any place - in generated column, functional index expression, and even in simple query.

Quite correct. This can cause problems in other situations as well. Changing a user-defined data type is dangerous.